May 26, 2023

Kaz Sato

Developer Advocate, Google Cloud

Ivan Cheung

Developer Programs Engineer, Google Cloud

Many people are now starting to think about how to bring Gen AI and large language models (LLMs) to production services. You may be wondering "How do I integrate LLMs or AI chatbots with existing IT systems, databases and business data?", "We have thousands of products. How can I have the LLM memorize them all precisely?", or "How do I handle hallucination in AI chatbots to build a reliable service?". Here is a quick solution: grounding with embeddings and vector search.

What is grounding? What are embeddings and vector search? In this post, we will learn these crucial concepts to build reliable Gen AI services for enterprise use. But before we dive deeper, here is an example:

Semantic search on 8 million Stack Overflow questions in milliseconds. (Try the demo here)

This demo is available as a public live demo here. Select "STACKOVERFLOW" and enter any coding question as a query to run a text search over the 8 million questions posted on Stack Overflow.

The following points make this demo unique:

- LLM-enabled semantic search: The 8 million Stack Overflow questions and the query text are both interpreted by Vertex AI Generative AI models. The model understands the meaning and intent (semantics) of the text and code snippets in the question body with librarian-level precision. The demo uses this ability to find highly relevant questions, and goes far beyond simple keyword search in terms of user experience. For example, if you enter "How do I write a class that instantiates only once", the demo shows "How to create a singleton class" at the top, because the model knows the two mean the same thing in the context of computer programming.

- Grounded to business facts: In this demo, we didn't try to make the LLM memorize the 8 million items with complex and lengthy prompt engineering. Instead, we attached the Stack Overflow dataset to the model as an external memory using vector search, and used no prompt engineering. This means the outputs are all directly "grounded" (connected) to the business facts, rather than being artificial output from the LLM. So the demo is ready to be served today as a production service with mission-critical business responsibility. It does not suffer from the limits of LLM memory or from unexpected LLM behaviors such as hallucinations.

- Scalable and fast: The demo returns search results in tens of milliseconds while retaining its deep semantic understanding. It can also scale out to handle thousands of search queries every second. This is enabled by the combination of LLM embeddings and Google AI's vector search technology.

The key enablers of this solution are 1) the embeddings generated with Vertex AI Embeddings for Text and 2) fast and scalable vector search by Vertex AI Matching Engine. Let's start by taking a look at these technologies.
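As a rough picture of how the two enablers fit together, here is a minimal sketch in Python. It is not the demo's implementation: the 4-dimensional vectors are made-up stand-ins for real embeddings, the `search` and `cosine_similarity` helpers are hypothetical names, and the linear scan stands in for a real vector search service, which would use an index built for fast lookup at scale.

```python
import math

# Hypothetical toy "embeddings" invented for illustration only.
# Real text embeddings would come from an embedding model.
INDEX = [
    ("How to create a singleton class", [0.9, 0.1, 0.0, 0.1]),
    ("How to parse JSON in Python", [0.1, 0.9, 0.1, 0.0]),
    ("How to center a div with CSS", [0.0, 0.1, 0.9, 0.2]),
]


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(query_vector, index, k=2):
    """Brute-force nearest neighbor search: score every item, keep the top k."""
    scored = [(cosine_similarity(query_vector, vec), text) for text, vec in index]
    scored.sort(reverse=True)
    # Every result is a stored record, not generated text ("grounded").
    return [(text, score) for score, text in scored[:k]]


# Assume this is the embedding of
# "How do I write a class that instantiates only once".
query_vector = [0.8, 0.2, 0.1, 0.1]
results = search(query_vector, INDEX)
for text, score in results:
    print(f"{score:.3f}  {text}")
```

Because the search only ever returns items that already exist in the index, the output cannot contain invented content, which is the sense in which the results are grounded.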

First key enabler: Vertex AI Embeddings for Text

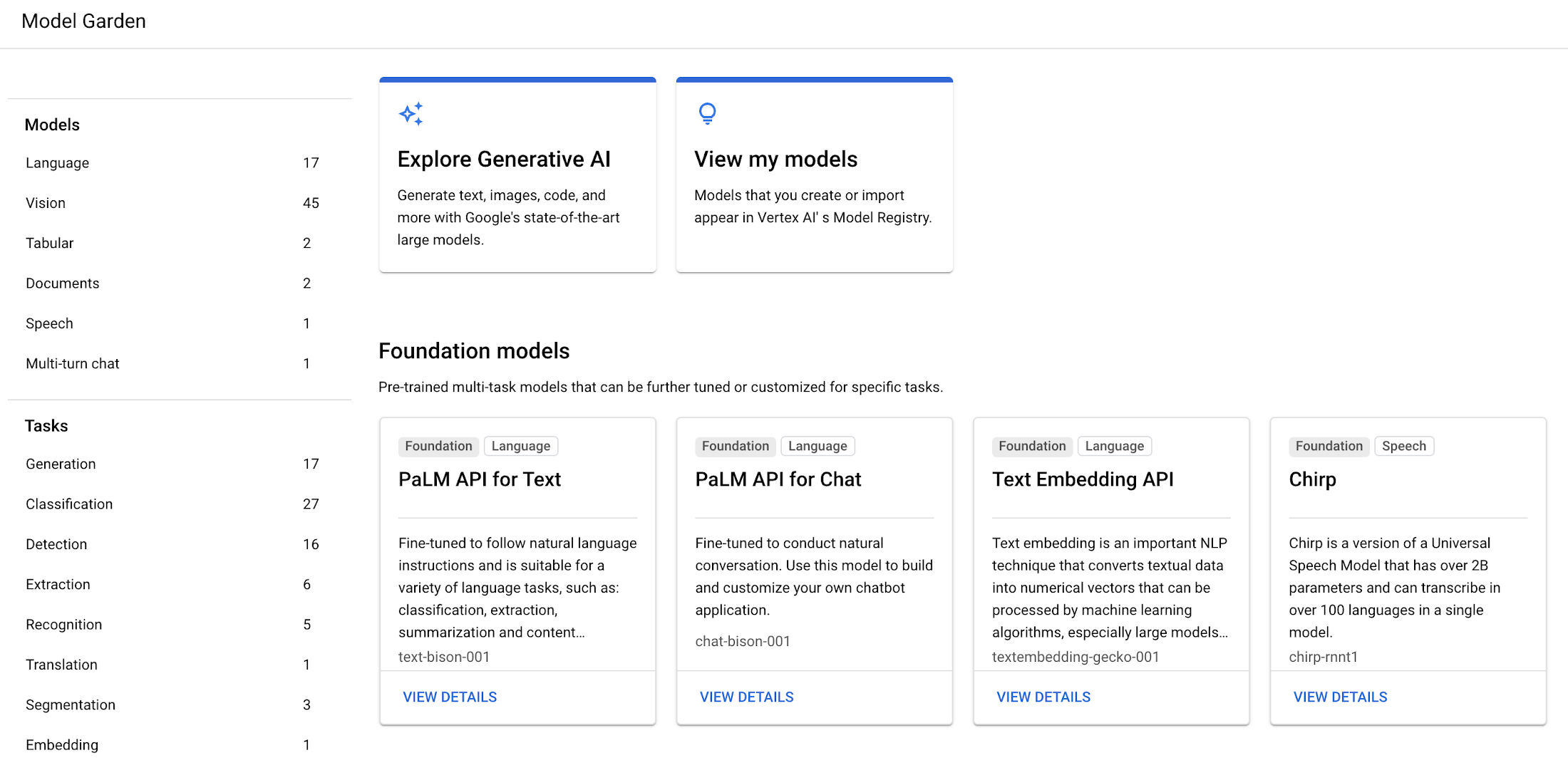

On May 10, 2023, Google Cloud announced the following Embedding APIs for Text and Image. They are available on Vertex AI Model Garden.

- Embeddings for Text: The API takes text input of up to 3,072 input tokens and outputs 768-dimensional text embeddings. It is available as a public preview. As of May 10, 2023, the pricing is $0.0001 per 1,000 characters (the latest pricing is available on the Pricing for Generative AI models page).

- Embeddings for Image: Based on Google AI's Contrastive Captioners (CoCa) model, the API takes either image or text input and outputs 1024-dimensional image/text multimodal embeddings. It is available to trusted testers. Because the embeddings are multimodal, you can run semantic search on images with text queries, or vice versa. We will feature this API in another blog post soon.
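To get a feel for the text embedding pricing quoted above, here is a small back-of-the-envelope sketch. The price is the May 10, 2023 figure from this post; the average question length of 1,000 characters is purely an assumption for illustration, not a measured property of the Stack Overflow dataset, and `embedding_cost_usd` is a hypothetical helper name.

```python
from decimal import Decimal

# USD per 1,000 characters, as quoted in this post for May 10, 2023.
PRICE_PER_1000_CHARS = Decimal("0.0001")


def embedding_cost_usd(total_characters: int) -> Decimal:
    """Estimate the cost of embedding the given number of characters."""
    return PRICE_PER_1000_CHARS * Decimal(total_characters) / Decimal(1000)


NUM_QUESTIONS = 8_000_000
ASSUMED_AVG_CHARS = 1_000  # hypothetical average length, for illustration only

cost = embedding_cost_usd(NUM_QUESTIONS * ASSUMED_AVG_CHARS)
print(f"Estimated embedding cost: ${cost:,.2f}")
```

Under that assumption, 8 million questions amount to 8 billion characters, or about $800 at the quoted price. Check the current pricing page before relying on any such estimate.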

In this blog post, we will explain why embeddings are useful and show you how to build an application that uses the Embeddings API for Text. In a future blog post, we will provide a deep dive on the Embeddings API for Image.

Embeddings API for Text on Vertex AI Model Garden

What are embeddings?

So, what are semantic search and embeddings? With the rise of LLMs, why is it becoming important for IT engineers and ITDMs to understand how they work? To find out, take five minutes to watch this video from a Google I/O 2023 session:

Also, Foundational courses: Embeddings in the Google Machine Learning Crash Course and Meet AI's multitool: Vector embeddings by Dale Markowitz are great resources for learning more about embeddings.